How to create an A/B test campaign

Last updated November 4, 2025

What is A/B testing?

A/B testing, also known as split testing, is the practice of sending two different versions of your email to a sample of the contacts in your lists/segments to see which version performs better. The version that receives more engagement is then sent to the rest of your contacts. You can read more about A/B testing here.
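To make the split concrete, here is a minimal Python sketch of how a contact list could be divided into a Version A sample, a Version B sample, and the remaining contacts who later receive the winning version. The function name `split_for_ab_test`, the whole-number percentage sample size, the even A/B split, and the random assignment are all assumptions for illustration. This is not Mailmodo's implementation or API.

```python
import random
from typing import List, Sequence, Tuple


def split_for_ab_test(
    contacts: Sequence[str],
    sample_percent: int,
    seed: int = 0,
) -> Tuple[List[str], List[str], List[str]]:
    """Hypothetical helper: split contacts into A sample, B sample, and remainder.

    Assumes the sample is a whole-number percentage of the list and is divided
    evenly between Version A and Version B. Not Mailmodo's actual logic.
    """
    if not 1 <= sample_percent <= 100:
        raise ValueError("sample_percent must be between 1 and 100")

    shuffled = list(contacts)
    # Shuffle so assignment does not depend on the order of the list.
    random.Random(seed).shuffle(shuffled)

    # Integer arithmetic keeps the sample size exact (no float rounding).
    sample_size = len(shuffled) * sample_percent // 100
    half = sample_size // 2  # an odd leftover contact joins the remainder

    group_a = shuffled[:half]
    group_b = shuffled[half:2 * half]
    remainder = shuffled[2 * half:]  # later receives the winning version
    return group_a, group_b, remainder


contacts = [f"contact{i}@example.com" for i in range(1000)]
group_a, group_b, remainder = split_for_ab_test(contacts, sample_percent=20)
print(len(group_a), len(group_b), len(remainder))  # 100 100 800
```

For example, with 1,000 contacts and a 20% sample, each version goes to 100 contacts and the remaining 800 receive whichever version wins.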

✨ Powered by Mailmodo AI: Mailmodo AI automatically analyzes your past campaigns and recommends which element (subject line, content block, or CTA) to test for better performance. It also predicts the winning version based on historical engagement trends.

In this article, you'll learn how to create an A/B testing campaign in Mailmodo.

You create an A/B test campaign in a series of steps. First, decide what you want to test (e.g., subject line or content), then select your lists/segments. Next, set up the criteria that automatically decide which version is the winner. Finally, review and send the campaign.

Step 1: Start creating your A/B test

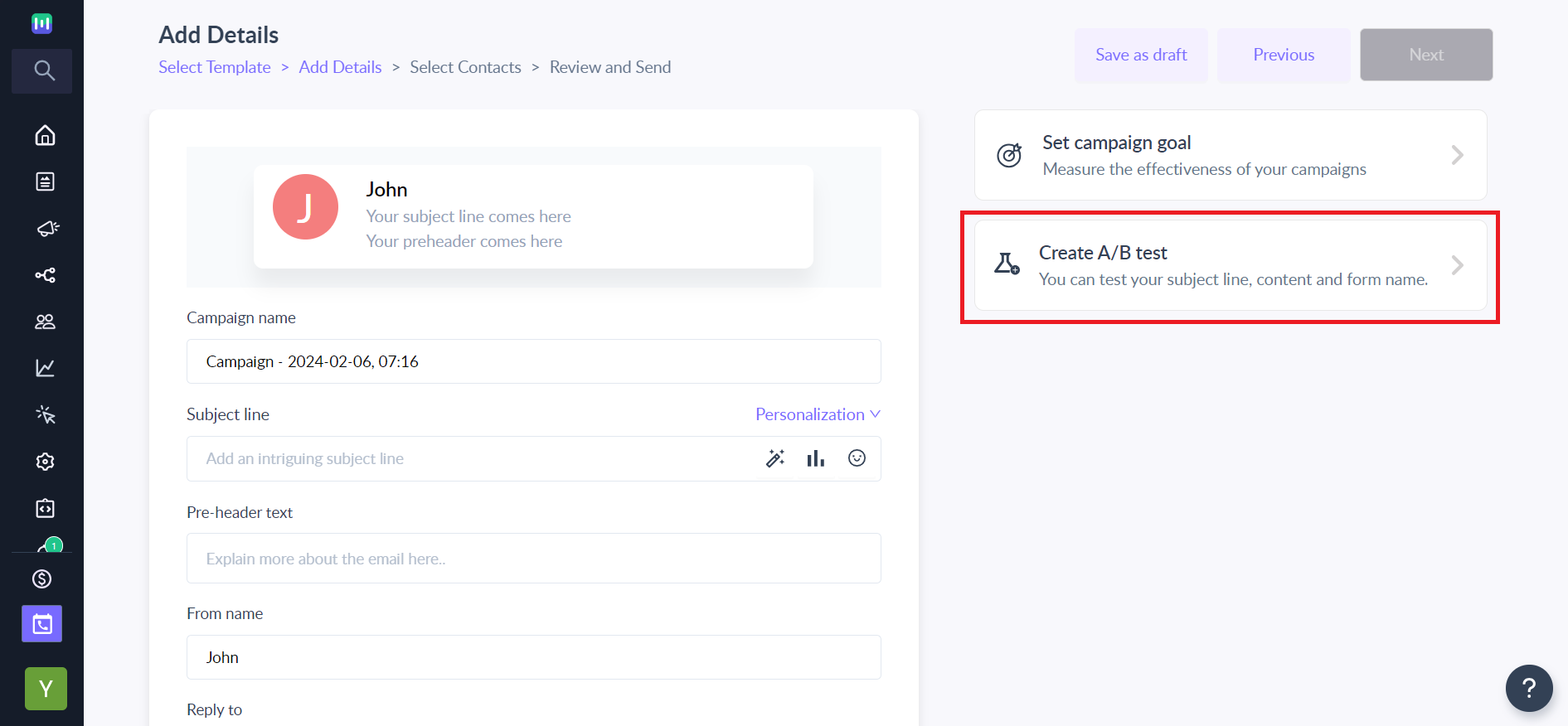

After selecting the template and entering the campaign details, click Create A/B test at the bottom of the page to begin creating your A/B test campaign.

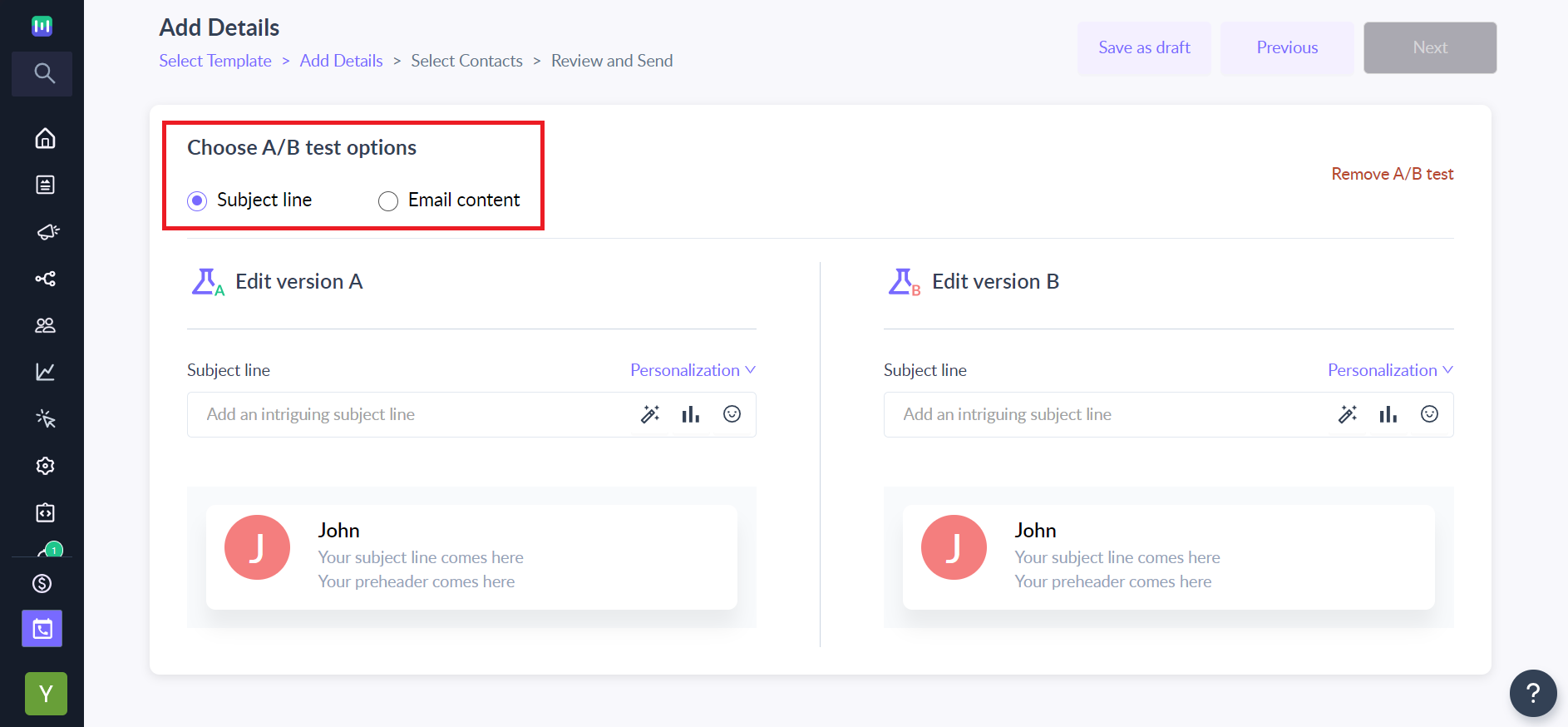

Step 2: Choose what you want to test

Choose which variable you want to test: the subject line or the content. If you choose content A/B testing, you can select two templates, Version A and Version B. For content A/B testing, we recommend preparing both template versions in advance so that you can select them easily here.

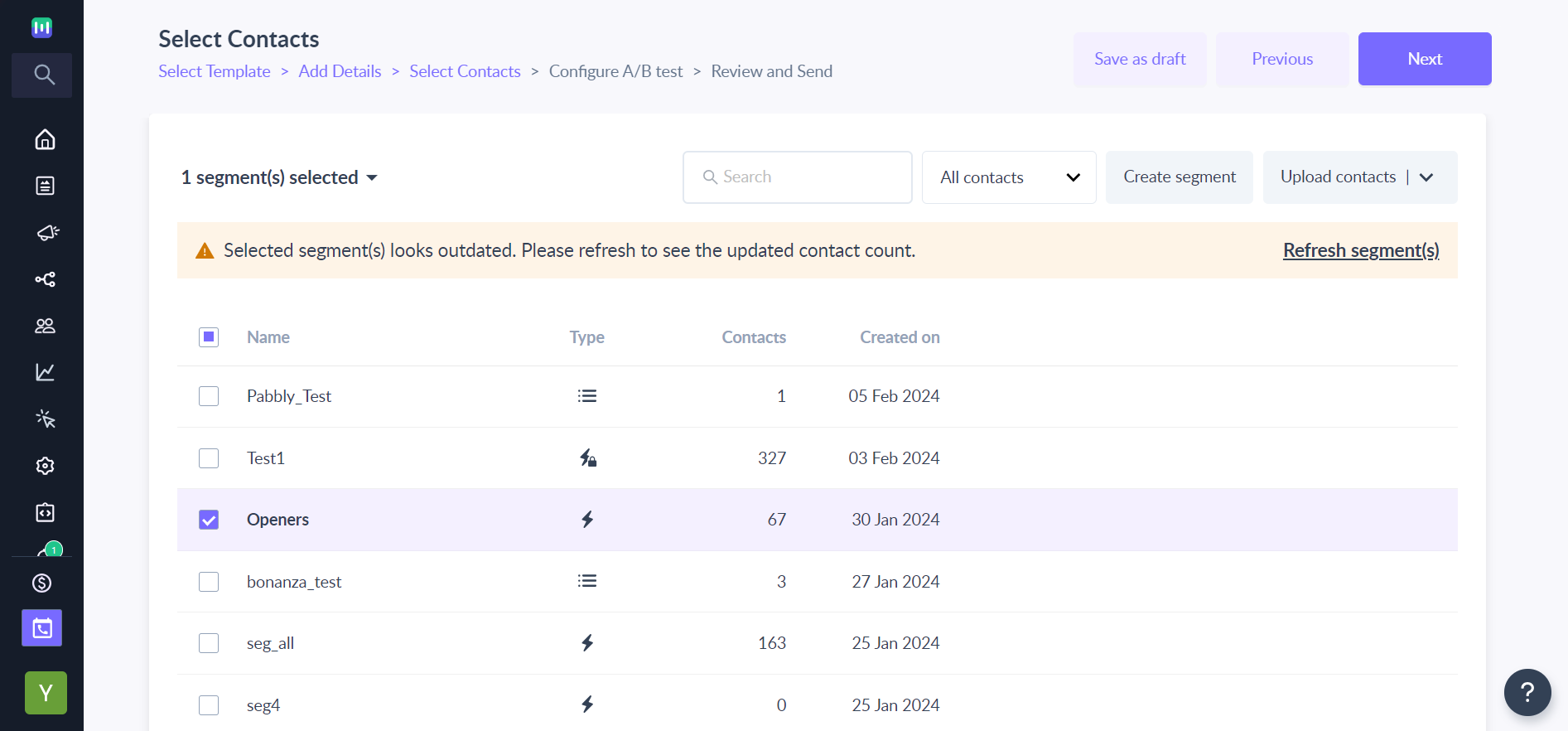

Step 3: Select your contacts

Select the lists/segments for your campaign, then move to the next step to configure the test.

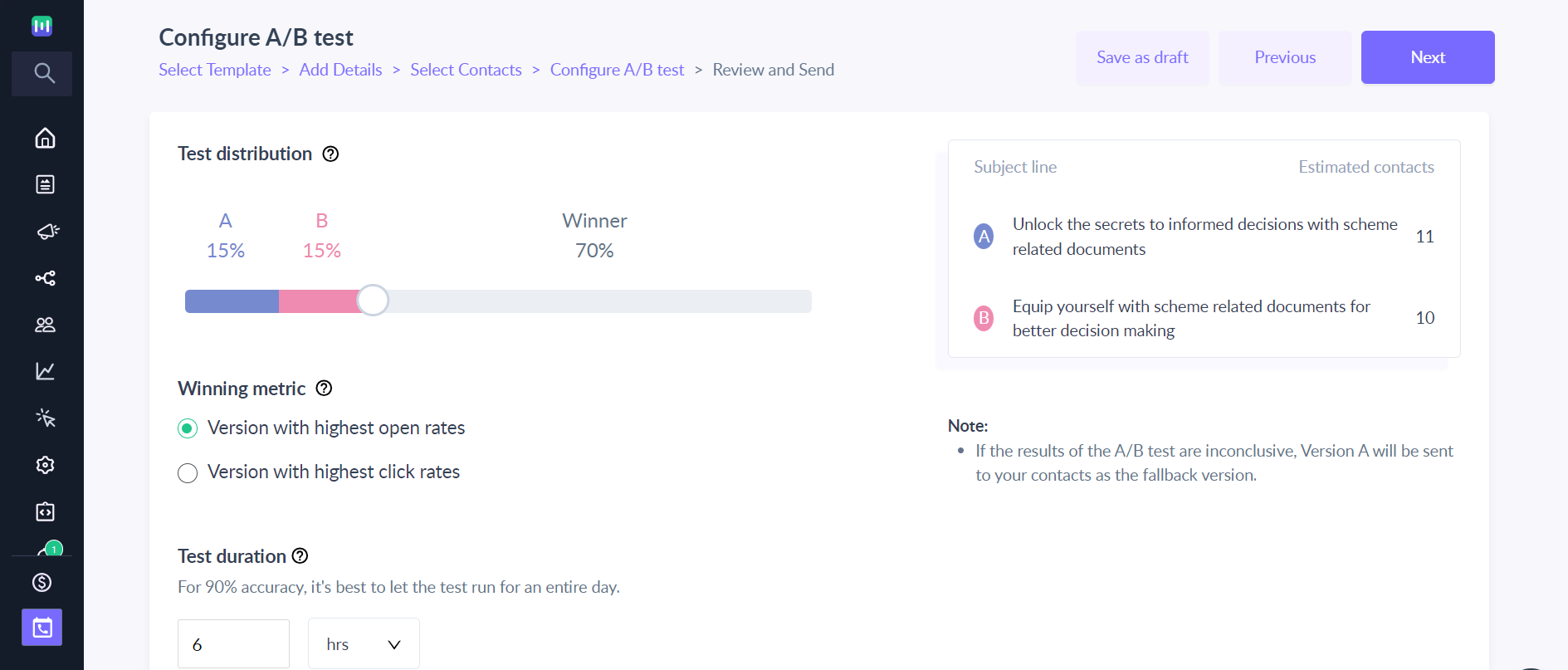

Step 4: Decide the winning criteria for your A/B test

In this step, you configure three settings: the sample size (the portion of your contacts who will receive the test versions), the winning criteria, and how long the A/B test should run before the winner is decided automatically.

You can choose either Open rate or Click rate as the winning metric, depending on what you are testing. In case of a tie, Version A is always selected as the fallback winner.

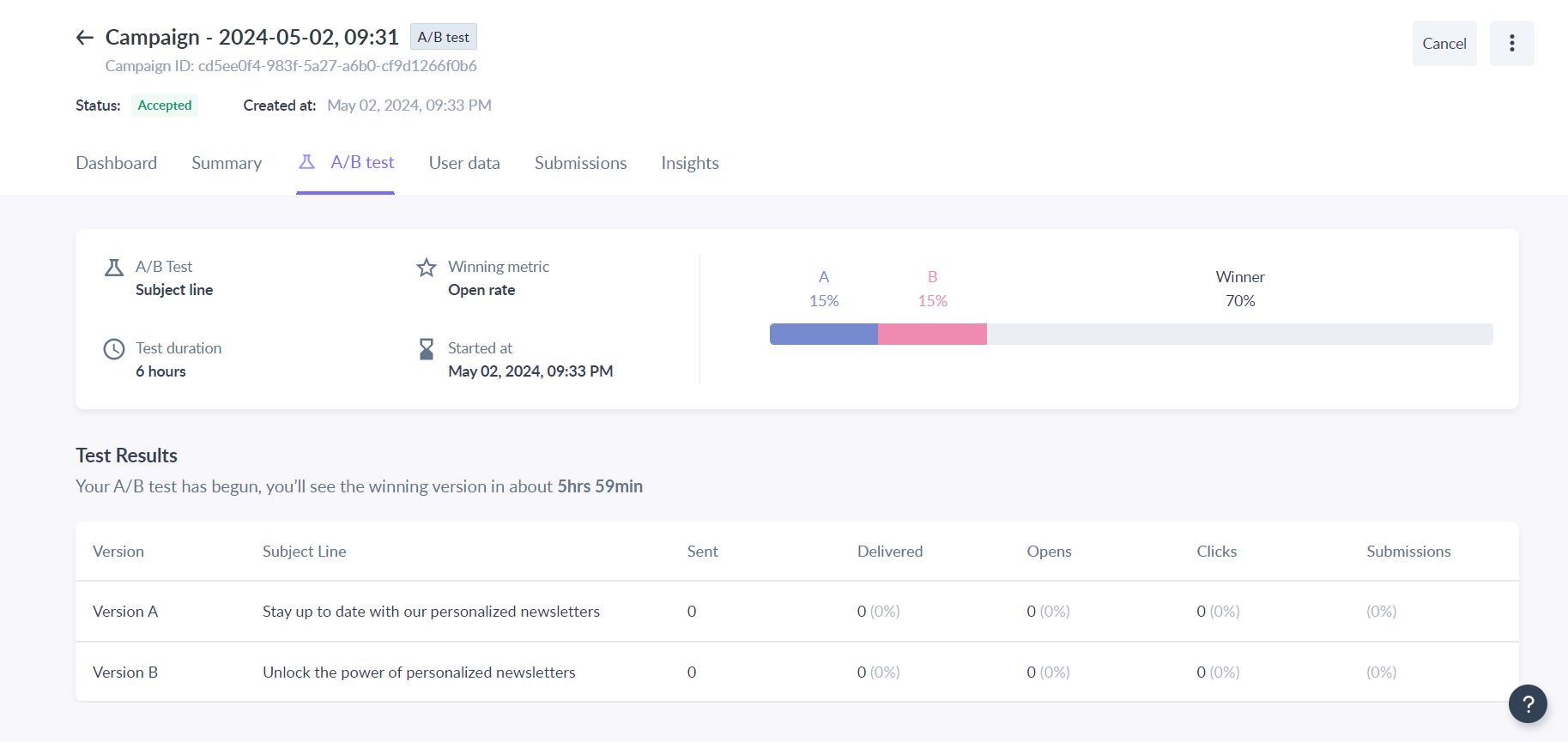
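The winner rule described in this step, including the tie fallback to Version A, can be sketched in Python as follows. The `VariantStats` fields, the metric strings `"open_rate"` and `"click_rate"`, and the choice to compute each rate per delivered email are assumptions for illustration, not Mailmodo's documented definitions.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass
class VariantStats:
    """Hypothetical per-version counts collected during the test window."""
    delivered: int
    opens: int
    clicks: int


def rate(count: int, delivered: int) -> Fraction:
    # Exact fractions avoid floating-point noise when comparing for ties.
    return Fraction(count, delivered) if delivered else Fraction(0)


def pick_winner(a: VariantStats, b: VariantStats, metric: str) -> str:
    """Return "A" or "B"; a tie falls back to Version A."""
    if metric == "open_rate":
        score_a, score_b = rate(a.opens, a.delivered), rate(b.opens, b.delivered)
    elif metric == "click_rate":
        score_a, score_b = rate(a.clicks, a.delivered), rate(b.clicks, b.delivered)
    else:
        raise ValueError(f"unknown metric: {metric}")
    # Version B wins only if strictly better; equal scores keep Version A.
    return "B" if score_b > score_a else "A"


a = VariantStats(delivered=100, opens=20, clicks=5)
b = VariantStats(delivered=200, opens=40, clicks=12)
print(pick_winner(a, b, "open_rate"))   # A (both 20%, tie goes to A)
print(pick_winner(a, b, "click_rate"))  # B (6% beats 5%)
```

Comparing exact fractions rather than floats means two versions with the same underlying rate are always treated as a tie, so the fallback to Version A applies predictably.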

In the next step, you can review the campaign details, test the emails, and schedule your A/B test campaign. Once the campaign is scheduled, you will see the A/B test performance and the winner for your campaign in the A/B test dashboard.

If you have any questions, reach out to us at Mailmodo Support or share your thoughts at Mailmodo Product Feedback.